COMPUTATIONAL RESEARCH in BOSTON and BEYOND (CRIBB)

Welcome! This is an archive page for a previous or upcoming year of the Computational Research in Boston and Beyond Seminar (CRiBB). To see current seminar information visit the Home Page.

To subscribe to a low-traffic mailing list for announcements related to the forum, please visit the CRiB-list web page.

For more information, e-mail Professor Alan Edelman (edelman AT math.mit.edu) and/or Professor Jeremy Kepner (kepner AT ll.mit.edu).

Organizers : 2025

| Professor Alan Edelman | (MIT - Math & CSAIL) |

| Dr. Chris Hill | (MIT - ORCD & EAPS) |

| Professor Steven G. Johnson | (MIT - Math & RLE) |

| Dr. Jeremy Kepner | (MIT - LL & Math & Connection Science) |

| Dr. Albert Reuther | (MIT - LL) |

Meetings : 2025

Meetings are held virtually via Zoom on the first Friday of the month from 12:00 PM to 1:00 PM.

https://mit.zoom.us/j/91933017072 | Meeting ID: 919 3301 7072

| Jan 3 |

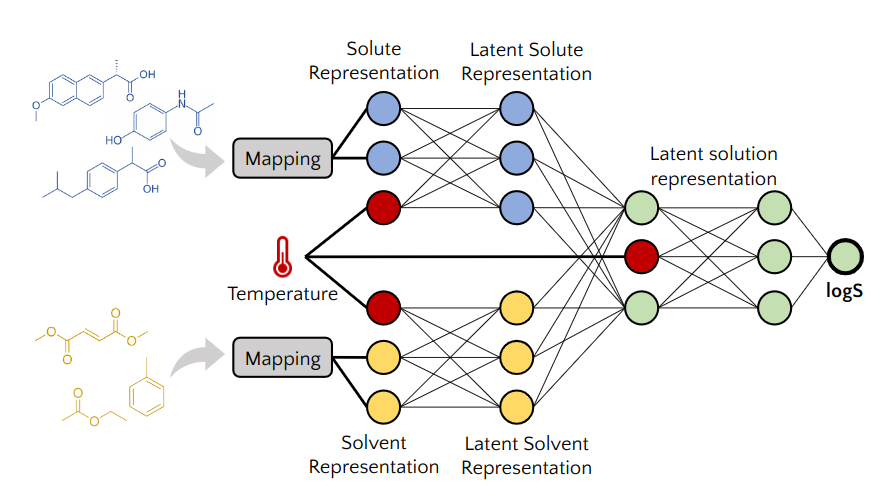

Jackson Burns (MIT): Solid Solubility Prediction at the Limit of Experimental Accuracy. Small molecule solubility is a critically important property which affects the efficiency, environmental impact, and phase behavior of synthetic processes. Experimental determination of solubility is a time- and resource-intensive process, and existing methods for in silico estimation of solubility are limited by their generality, speed, and accuracy. This work presents two models derived from the fastprop and chemprop architectures and trained on BigSolDB which are capable of predicting solubility at arbitrary temperatures for any small molecule in organic solvent. Both extrapolate to unseen solutes 2-3 times more accurately than the current state-of-the-art model and we demonstrate that they are approaching the aleatoric limit (0.5-1 logS), suggesting that further improvements in prediction accuracy require more accurate datasets. These models, collectively referred to as fastsolv, are open source, freely accessible via a Python package and web interface, highly reproducible, and up to 50 times faster than the next best alternative.

|

| Mar 7 |

Elyssa Hofgard (MIT): Using E(3)-Equivariant Neural Networks (ENNs) to Uncover Symmetry-Implied Missing Information. We demonstrate how E(3)-Equivariant Neural Networks (ENNs) can detect symmetry breaking in physical data and reveal hidden symmetry-implied information. Symmetry breaking, whether spontaneous (as in crystalline phase transitions) or induced by external forces, plays a crucial role in our understanding of physical systems. We show that an external input parameter to a fully equivariant model can be used to break symmetry in a physically interpretable way. We apply our approach to altermagnets, a new class of magnetic materials, in order to learn magnetic multipoles. |

| April 4 |

Praneeth Vepakomma (MIT/IDSS and MBZUAI): Extremely-efficient fine-tuning of LLMs. Large Language Models (LLMs) have reshaped generative AI, but fully fine-tuning these massive architectures is quite expensive in computational and communication resources. Low-Rank Adaptation (LoRA) partially mitigates these challenges, yet conventional LoRA often struggles to match the performance of full fine-tuning. In this talk, I introduce LoRA-SB (LoRA Silver Bullet), a novel approach that injects a constrained update space into LoRA’s framework, enabling optimal scaling for high-rank gradient directions that mimic full fine-tuning in a low-rank space, and that matches the performance of full fine-tuning. We theoretically prove that our initialization strategy provides an optimal low-rank approximation of the initial gradient and preserves critical update directions throughout training. Extensive experiments on mathematical reasoning, commonsense inference, and language understanding tasks show that LoRA-SB exceeds the performance of standard LoRA while requiring 27–90× fewer trainable parameters and comprehensively outperforms LoRA-XS. Our findings demonstrate that it is not only possible but also highly effective to simulate full fine-tuning in low-rank subspaces, offering significant efficiency gains at no loss in accuracy. Additionally, we introduce Fed-SB, a federated extension of LoRA-SB that employs direct averaging of the small matrix R to guarantee exact updates and drastically reduce communication costs by up to 230×, independent of the number of clients. Fed-SB further enhances privacy-utility-communication efficiency trade-offs by lowering noise requirements and avoiding noise amplification. Overall, it establishes a new Pareto frontier for efficient, scalable federated fine-tuning in both private and non-private settings. |

| June 6 |

Lauren Chua (MIT): A Data-Driven Framework for Predicting Frontal Polymerization from Monomer Structure. While thermoset materials offer excellent performance, such as in renewable energy and aerospace applications, they are energy-intensive to make. Only a limited set of monomer chemistries have been identified as amenable to more energy-efficient production via frontal polymerization. To learn the governing design principles and optimize for more sustainable high-performance polymer materials, it is critical to link the non-equilibrium process of frontal polymerization with a given monomer choice. We developed computational pipelines to learn how molecular structure informs the ability of a monomer resin to undergo energy-efficient manufacturing, specifically through frontal ring-opening metathesis polymerization (FROMP). In our virtual automated workflow, we combinatorially enumerate candidate monomers and subsequently calculate key reaction parameters from first principles using density functional theory (DFT). We then integrate atomistic chemical insights into a mechanism-based reaction-diffusion model that simulates front propagation. This approach allows us to detail design rules for monomer choice and predict the suitability of a wide range of monomers for FROMP, purely from computation. Overall, our data-driven framework enables rapid processing and screening of new candidates, providing a foundation for in silico evaluation and discovery of FROMP-amenable materials. |

| October 3 |

Cleve Moler (MathWorks): Pictures of Matrices. In 1988, I gave a talk previewing MATLAB graphics entitled "Pictures of Matrices". At the time, a mathematician friend of mine asked, "Why would anyone want pictures of matrices?" My response is the graphics demonstrations that we have made over the years. This talk describes a few examples. |

| November 7 |

Steven G. Johnson (MIT): Optimizing physical systems using "unphysical" simulations. "Inverse design" is the technique of using large-scale optimization (over thousands or even millions of parameters) to allow the computer to "discover" the best arrangement of materials for a given application, often leading to non-intuitive freeform geometries. In contrast to machine learning, this is typically model-driven optimization rather than data-driven. In particular, the most straightforward way to perform inverse design is to apply optimization to the result of an accurate physical model that directly simulates the desired effect: a "numerical experiment" mirroring (one hopes) the real-world performance. However, we argue that this is often too restrictive: sometimes, vastly better performance is attainable by allowing the computer to exploit "unphysical" models (inaccurate simulations, unphysical materials, and regimes inaccessible to realistic experiments), as long as the eventual result (after optimization convergence) returns to the physical realm. We illustrate these ideas with several examples from photonics topology optimization, including incoherent emission, laser optimization, and the design of optical filters. |

| December 5 |

Charles E. Leiserson (MIT): Setting a Course for Post-Moore Software Performance. Software performance engineering (SPE) is the science and art of making code run fast or otherwise limiting its consumption of resources, such as power, memory footprint, network utilization, file I/O, response time, etc. SPE encompasses parallel computing, but it also includes other techniques, such as caching, vectorization, algorithms, bit tricks, loop unrolling, compiler-switch selection, tailoring code to the architecture, exploiting sparsity, changing data representation, metaprogramming, etc. Historically, gains in performance from miniaturization, codified in Moore’s Law, relieved programmers from the burden of making software run fast and learning SPE techniques. I will explain why the end of Moore’s Law has made SPE a critical technical skill. Since SPE is neither extensively researched nor widely taught in universities, however, it risks devolving into an unstructured collection of ad hoc tricks. Now is the time to establish SPE as a science-based discipline, alongside traditional areas of computer science. |

Archives

Acknowledgements

We thank the MIT Department of Mathematics, Student Chapter of SIAM, ORCD, and LLSC for their generous support of this series.